Like everyone (well, probably almost everyone) interested in Natural Language Processing, I have started playing with deep learning. Deep learning (which is basically a new name for neural networks) allows a computer to automatically learn to perform a task, given a (usually huge) number of examples.

I've experimented with a powerful piece of software called OpenNMT. If you have a task which involves taking a text as input and producing another text as output, and you have a large corpus for training, you can try teaching it to OpenNMT.

This very general description covers lots of different tasks. For instance:

- translation is one such task. Take an English sentence, produce a French sentence.

- summarisation is another: the input is the text to summarise, the output is the summarised text.

- all kinds of annotation may be considered this way too: the input is the raw text, the output is the annotated text (but in the case of annotation, other architectures might be more straightforward).

- for Egyptology, transliteration can be thought of as a text transformation. You take the Manuel de Codage text as input, and you output the transliterated text.
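To make the "text in, text out" framing concrete, here is a minimal sketch of how such a corpus could be laid out as line-aligned source and target files, a layout sequence-to-sequence toolkits commonly consume. It is not the actual Ramsès export: the file names, the `write_parallel_corpus` helper and the `SIGNS_...` source strings are placeholders made up for illustration (real input would be Manuel de Codage text). Only the two transliterations come from this post.

```python
import random
import tempfile
from pathlib import Path

# Hypothetical pairs: the source side is a PLACEHOLDER for Manuel de Codage
# text; the target side is a transliteration quoted in this post.
EXAMPLE_PAIRS = [
    ("SIGNS_FOR_SENTENCE_1", "qd=j n =k bḫn n-mꜣw.t"),
    ("SIGNS_FOR_SENTENCE_2", "jw bwpw=f jnj.t=f"),
]


def write_parallel_corpus(pairs, out_dir, valid_fraction=0.1, seed=0):
    """Write (source, target) pairs as line-aligned text files.

    Line i of src-<split>.txt corresponds to line i of tgt-<split>.txt.
    Returns the number of pairs written to each split.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    # Shuffle with a fixed seed so the train/validation split is reproducible.
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)

    # Keep at least one pair aside for validation.
    n_valid = max(1, int(len(shuffled) * valid_fraction))
    splits = {"valid": shuffled[:n_valid], "train": shuffled[n_valid:]}

    for name, split in splits.items():
        src_text = "".join(src + "\n" for src, _ in split)
        tgt_text = "".join(tgt + "\n" for _, tgt in split)
        (out_dir / f"src-{name}.txt").write_text(src_text, encoding="utf-8")
        (out_dir / f"tgt-{name}.txt").write_text(tgt_text, encoding="utf-8")

    return {name: len(split) for name, split in splits.items()}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_parallel_corpus(EXAMPLE_PAIRS, tmp))
```

The only real constraint is that both files of a split have the same number of lines in the same order. Everything else (tokenisation, split size) is a choice to adapt to the corpus.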

I was wondering what we could do with the Ramsès database. It's large, but not as huge as the corpora usually used for machine learning (Ramsès currently has approximately half a million words, whereas the sample corpus for German-English translation used by OpenNMT is 4,500,000 sentences long). My first idea was to check whether our data was large enough for training.

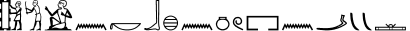

It turns out that, for transliteration, the result was way better than I expected. Some work and analysis are needed to improve it, but, frankly, I was amazed by the first outputs. I intend to write more detailed articles about it, but I'll give a few examples here. The transliterations below have been generated automatically, and the system had never seen the exact same sentences (note that these are Late Egyptian texts).

qd=j n =k bḫn n-mꜣw.t

Note that the system produces a transliteration complete with word separations and grammatical annotations ("=" for suffix pronouns, "-" in some compound nouns).

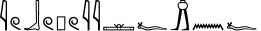

jw bwpw=f jnj.t=f

The remarkable fact is that, although the ".t" of the infinitive after bwpw is not written, the system has inferred it was needed after the negation.

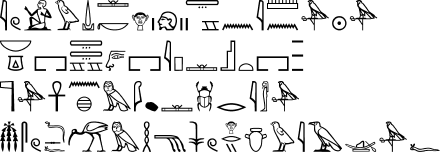

iw=j wḏꜣ.kwj ḥr-tp tA n jmn-rꜥ nb ns.t tꜣ.wy ḫnty jp s.t

nṯr ꜥnx m mꜣꜥ.t ḫprw msw ḏs=f gmḥ sw ḥry-ib wjꜣ=f

OK, in this case, the system missed the ".t" and the plural of ip.t-s.wt, and misread ms sw ḏs=f as msw ḏs=f (and, as the text is a bit complex, it also misunderstood gmḥsw, which is a bird of prey, and here an epithet of Amun, but is actually written as gmḥ sw).
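One way to put a number on such mistakes is a word-level edit distance between the system output and a corrected reading. The sketch below is only an illustration, not the evaluation used for these experiments. The `REFERENCE` string is my own reconstruction from the corrections listed above (with ip.t-s.wt spelled as in that comment), restricted to the part of the sentence starting at ḫnty, and the function names are made up for this example.

```python
# Reconstructed reference (an ASSUMPTION based on the corrections above).
REFERENCE = "ḫnty ip.t-s.wt nṯr ꜥnx m mꜣꜥ.t ḫprw ms sw ḏs=f gmḥsw ḥry-ib wjꜣ=f"
# The corresponding part of the system output, copied from the example.
SYSTEM_OUTPUT = "ḫnty jp s.t nṯr ꜥnx m mꜣꜥ.t ḫprw msw ḏs=f gmḥ sw ḥry-ib wjꜣ=f"


def word_edit_distance(reference, hypothesis):
    """Minimum number of word insertions, deletions and substitutions
    needed to turn the hypothesis into the reference (Levenshtein on words)."""
    ref, hyp = reference.split(), hypothesis.split()
    # previous[j] = distance between the ref words seen so far and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def word_error_rate(reference, hypothesis):
    """Word edit distance divided by the number of reference words."""
    n_words = len(reference.split())
    if n_words == 0:
        raise ValueError("reference must contain at least one word")
    return word_edit_distance(reference, hypothesis) / n_words


if __name__ == "__main__":
    print(word_edit_distance(REFERENCE, SYSTEM_OUTPUT))  # 6 word-level edits
    print(round(word_error_rate(REFERENCE, SYSTEM_OUTPUT), 2))
```

Each of the three problems costs two edits here (a substitution plus an inserted or deleted word), so the measure is fairly harsh on word-splitting mistakes. A character-level variant would be gentler.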

There are of course cases where the system doesn't work as well as here, but overall it's quite impressive. To reassure Egyptologists, the system makes very basic and stupid mistakes. Basically, it is doing some kind of sophisticated pattern recognition, which allows it to propose a "best match" for transliteration. Occasionally, this involves quite complex stuff, like in "jnj.t=f" above. But at the same time, there is absolutely no reasoning behind it. It can miss obvious solutions, and it can ignore a sign or completely misinterpret it.